Evaluate the Quality of a Video Dataset for Machine Learning

Introduction

A video dataset is a collection of video clips and related metadata used to train and test machine learning models for video analysis tasks. Each clip can be decoded into individual frames, which serve as model input for tasks such as action recognition, object detection, and video captioning. The quality and diversity of the dataset are critical to model performance: it should be representative of the target domain and contain a sufficient number of samples for each class. The clips themselves should also be of good quality, with adequate resolution, lighting, and frame rate (frames per second, fps).
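Several of these quality criteria can be checked automatically before training. The sketch below flags clips that fall below a resolution or frame-rate threshold and classes with too few samples. The `ClipInfo` record, the `audit_clips` helper, and the default thresholds (240 px height, 15 fps, 50 clips per class) are hypothetical, illustrative choices, not established standards.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ClipInfo:
    """Metadata for one clip (hypothetical record, filled from a video decoder)."""
    path: str
    label: str
    width: int
    height: int
    fps: float


def audit_clips(clips, min_height=240, min_fps=15.0, min_per_class=50):
    """Return (low-quality clip paths, under-represented classes with their counts).

    The default thresholds are illustrative assumptions; tune them for your task.
    """
    low_quality = [c.path for c in clips if c.height < min_height or c.fps < min_fps]
    counts = Counter(c.label for c in clips)
    underrepresented = {label: n for label, n in counts.items() if n < min_per_class}
    return low_quality, underrepresented


clips = [
    ClipInfo("a.mp4", "jump", 1280, 720, 30.0),
    ClipInfo("b.mp4", "jump", 320, 180, 30.0),  # too low-resolution
    ClipInfo("c.mp4", "run", 640, 480, 10.0),   # frame rate too low
]
low, rare = audit_clips(clips, min_per_class=2)
print(low)   # ['b.mp4', 'c.mp4']
print(rare)  # {'run': 1}
```

In practice the width, height, and fps fields could be read with a decoder such as OpenCV, whose `cv2.VideoCapture` exposes `CAP_PROP_FRAME_WIDTH`, `CAP_PROP_FRAME_HEIGHT`, and `CAP_PROP_FPS`. Lighting quality is harder to score and usually needs either a heuristic (for example mean frame brightness) or manual review.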

Annotated video datasets, where each clip is labeled with information such as action classes, object classes, and captions, are particularly useful for training supervised machine learning models. Video datasets can also be enriched with additional modalities, such as optical flow and audio, that give models more signal to learn from.
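As a rough illustration, an annotation record for one clip might bundle the labels and the optional extra modalities, and a simple validator can catch labels that fall outside the dataset's vocabulary. The `ClipAnnotation` fields and the `validate` helper below are a hypothetical schema for illustration, not the format of any particular dataset.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ClipAnnotation:
    """Hypothetical per-clip annotation record."""
    clip_id: str
    action: str
    objects: List[str] = field(default_factory=list)
    caption: Optional[str] = None
    flow_path: Optional[str] = None   # optional precomputed optical flow (assumed file layout)
    audio_path: Optional[str] = None  # optional extracted audio track


def validate(ann, action_vocab, object_vocab):
    """Return a list of problems found in one annotation; an empty list means it passed."""
    problems = []
    if ann.action not in action_vocab:
        problems.append(f"unknown action '{ann.action}'")
    problems += [f"unknown object '{o}'" for o in ann.objects if o not in object_vocab]
    if ann.caption is not None and not ann.caption.strip():
        problems.append("empty caption")
    return problems


ann = ClipAnnotation("clip_0001", "pour", objects=["cup", "kettel"], caption="  ")
print(validate(ann, {"pour", "stir"}, {"cup", "kettle"}))
# ["unknown object 'kettel'", 'empty caption']
```

Running a check like this over every record before training catches typos and missing labels that would otherwise silently become extra classes.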

Popular video datasets for machine learning

There are several video datasets that can be used for machine learning and deep learning tasks. Here are some popular ones:

UCF101: A dataset of 13,320 clips spanning 101 human action categories, collected from YouTube. It is a standard benchmark for human action recognition.

HMDB51: A dataset of 51 action categories, drawn mostly from movies and web videos, covering both single-person actions and interactions with objects or other people, with realistic variation in background and illumination.

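Kinetics: A large-scale, high-quality dataset of YouTube video URLs, each pointing to a clip of roughly 10 seconds. The original Kinetics-400 release covers 400 human action classes, and later releases extend this to 600 and 700 classes. It is used mainly for action recognition and as a pretraining source for video models.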

Something-Something: A crowd-sourced dataset of short clips of people performing basic actions with everyday objects (about 220,000 clips in its V2 release), annotated with 174 fine-grained action categories.

YouTube-8M: A large-scale video classification dataset that originally consisted of about 8 million YouTube video IDs labeled with a vocabulary of roughly 4,800 visual entities.

Benefits of outsourcing video dataset collection

Time-saving: Outsourcing the collection process can save a significant amount of time, because a dedicated team focuses on collecting and annotating the data, freeing your own team for other tasks.

Cost-effectiveness: Outsourcing the collection process can be cost-effective, because the provider spreads the cost of staff, equipment, and software across many projects instead of you building that capacity in-house.

Access to expertise: A professional data collection and video annotation team brings experience in collecting and annotating data, helping ensure the data is collected and annotated in a consistent and efficient manner.

Scalability: Outsourcing the collection process can provide the flexibility to scale up or down the size of the dataset, depending on the needs of the project.

Improved data quality: A professional data collection and annotation team will have a systematic and standardized approach to collecting and annotating data, leading to improved data quality and consistency.

Real-world examples of AI models built on video datasets

Here are some examples of real-world AI models that are trained on video datasets:

Action recognition: One example of an AI model that uses video datasets for action recognition is the two-stream Convolutional Neural Network (CNN) model, which uses both RGB and optical flow information from videos to recognize human actions. This model is trained on large video datasets such as UCF101 and HMDB51.
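The two streams produce separate class scores that have to be combined at the end. The two-stream approach fuses the streams' class scores; the sketch below uses the simplest form of that idea, a weighted average of per-class softmax probabilities. The example logits and the `flow_weight` of 0.5 are illustrative assumptions, not values from the original work.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of logits."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_two_stream(rgb_logits, flow_logits, flow_weight=0.5):
    """Late fusion: weighted average of the two streams' class probabilities.

    Returns (predicted class index, fused probabilities).
    """
    p_rgb = softmax(rgb_logits)
    p_flow = softmax(flow_logits)
    fused = [(1 - flow_weight) * a + flow_weight * b for a, b in zip(p_rgb, p_flow)]
    best = max(range(len(fused)), key=fused.__getitem__)
    return best, fused


# The appearance (RGB) stream favors class 0, the motion (flow) stream strongly favors class 1.
pred, probs = fuse_two_stream([2.0, 1.0, 0.0], [0.0, 3.0, 0.0])
print(pred)  # 1
```

Averaging probabilities lets a confident motion stream override a weakly confident appearance stream, which is the main reason the optical flow input helps on actions that look similar in single frames.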

Visual speech recognition: An AI model that uses video datasets for visual speech recognition is the LipNet model, which is trained on the GRID dataset to recognize speech from the movements of a person's lips. This model can be used for tasks such as lip-reading and speech recognition in noisy environments.
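LipNet maps a sequence of mouth-region frames to characters and is trained with the connectionist temporal classification (CTC) loss, which outputs one symbol distribution per frame, including a special "blank" symbol. The sketch below shows greedy CTC decoding: take the most likely symbol per frame, merge repeats, and drop blanks. The three-symbol alphabet and the probabilities are made up for illustration, and a production system would more likely use beam search.

```python
def ctc_greedy_decode(frame_probs, alphabet, blank=0):
    """Greedy CTC decoding.

    frame_probs: one list of per-symbol probabilities per video frame.
    alphabet: maps symbol index -> character; index `blank` is the CTC blank.
    """
    best = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    out = []
    prev = None
    for idx in best:
        # Emit a symbol only when it changes and is not the blank.
        if idx != prev and idx != blank:
            out.append(alphabet[idx])
        prev = idx
    return "".join(out)


alphabet = ["_", "a", "b"]  # index 0 is the blank (illustrative alphabet)
frames = [
    [0.1, 0.8, 0.1],    # a
    [0.2, 0.7, 0.1],    # a (repeat, merged)
    [0.9, 0.05, 0.05],  # blank separates the two a's
    [0.1, 0.6, 0.3],    # a
    [0.1, 0.2, 0.7],    # b
    [0.3, 0.1, 0.6],    # b (repeat, merged)
]
print(ctc_greedy_decode(frames, alphabet))  # aab
```

The blank is what allows genuinely doubled letters: without the blank frame in the middle, the first three "a" frames would collapse into a single "a".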

Video captioning: Transformer-based video captioning models generate natural-language descriptions of a video's content. They are commonly trained and evaluated on large video-text datasets such as MSR-VTT and ActivityNet Captions.

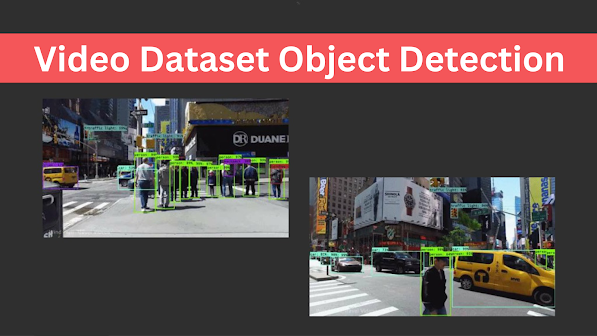

Video object detection: Faster R-CNN is a two-stage detector that combines a region proposal network with a detection network. It was designed for still images, so in video it is typically applied frame by frame or used as the backbone of detectors that aggregate information across frames. Such models are trained and evaluated on video datasets such as ImageNet VID and YouTube-BoundingBoxes (YouTube-BB).
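When an image detector runs frame by frame, its outputs are independent per frame. One simple way to turn them into object tracks, shown below, is to greedily link each box to the track whose last box overlaps it most, measured by intersection-over-union (IoU). This linking step is a swapped-in illustration of tracking-by-detection, not part of Faster R-CNN itself, and the 0.5 IoU threshold is an assumption.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def link_detections(frames, threshold=0.5):
    """Greedily link per-frame boxes into tracks by IoU with each track's last box.

    frames: list of per-frame box lists. Returns tracks as lists of (frame_idx, box).
    """
    tracks = []
    for t, boxes in enumerate(frames):
        # Only tracks that had a box in the previous frame can be extended.
        active = [tr for tr in tracks if tr[-1][0] == t - 1]
        for box in boxes:
            best, best_iou = None, threshold
            for tr in active:
                score = iou(tr[-1][1], box)
                if score >= best_iou:
                    best, best_iou = tr, score
            if best is not None:
                best.append((t, box))
                # Each track takes at most one box per frame (compare by identity).
                active = [tr for tr in active if tr is not best]
            else:
                tracks.append([(t, box)])
    return tracks


frames = [
    [(0, 0, 10, 10)],
    [(1, 1, 11, 11), (50, 50, 60, 60)],  # the second box starts a new object
    [(2, 2, 12, 12)],
]
tracks = link_detections(frames)
print(len(tracks))  # 2
```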

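Video summarization: Video summarization models select the most informative frames or segments of a video to produce a shorter summary, and are commonly evaluated on benchmarks such as SumMe and TVSum. Related models such as VideoBERT learn joint video and language representations from large collections of YouTube cooking videos and have been applied to tasks such as video captioning.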
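A full summarization model is beyond a short example, but the final selection step many keyframe-based approaches share can be sketched: given a per-frame importance score, keep the highest-scoring frames within a length budget and return them in temporal order. The scores here are made up, and the 15% default budget and `min_gap` spacing rule are illustrative assumptions.

```python
def select_keyframes(scores, budget=0.15, min_gap=1):
    """Pick the highest-scoring frames within a budget, returned in temporal order.

    scores: per-frame importance (hypothetical; e.g. produced by a model).
    budget: fraction of frames to keep.
    min_gap: minimum index distance between selected frames, to avoid near-duplicates.
    """
    k = max(1, round(len(scores) * budget))
    chosen = []
    for i in sorted(range(len(scores)), key=lambda i: scores[i], reverse=True):
        if all(abs(i - j) >= min_gap for j in chosen):
            chosen.append(i)
        if len(chosen) == k:
            break
    return sorted(chosen)


scores = [0.1, 0.9, 0.8, 0.2, 0.7, 0.3, 0.05, 0.6, 0.4, 0.5]
print(select_keyframes(scores, budget=0.3, min_gap=2))  # [1, 4, 7]
```

The `min_gap` constraint skips frame 2 even though it scores highly, because it sits right next to frame 1 and would likely show nearly the same content.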

How Global Technology Solutions can help with video dataset mining

Global Technology Solutions (GTS) can help with video dataset mining, drawing on its experience and expertise in developing datasets for machine learning across various industries. Its services include:

Data collection and annotation: GTS can help collect and annotate a large and diverse video dataset for machine learning tasks such as action recognition and visual speech recognition (lip-reading).

Data pre-processing and cleaning: GTS can help clean, pre-process and prepare the video dataset to make it ready for machine learning models.
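A common pre-processing step is reducing each clip to a fixed number of frames so that clips of different lengths produce inputs of the same shape. The helper below is a hypothetical sketch that splits a clip into equal segments and takes the center frame of each; randomizing the position within each segment during training is a common variation.

```python
def sample_frame_indices(num_frames, num_samples):
    """Pick `num_samples` frame indices spread evenly across a clip.

    The clip is split into equal segments and the center frame of each is taken.
    Clips shorter than `num_samples` return every frame.
    """
    if num_frames <= 0 or num_samples <= 0:
        return []
    if num_samples >= num_frames:
        return list(range(num_frames))
    step = num_frames / num_samples
    return [int(step * i + step / 2) for i in range(num_samples)]


# A 10-second clip at 30 fps reduced to 8 frames.
print(sample_frame_indices(300, 8))  # [18, 56, 93, 131, 168, 206, 243, 281]
```

Short clips are returned whole here; another option is to pad them by repeating frames, depending on what the model expects.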

Data augmentation: GTS can help augment the video dataset to improve the performance of machine learning models, for example, by adding artificial noise or flipping the video frames.
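One detail specific to video is that geometric augmentations should usually be applied identically to every frame of a clip; flipping frames independently would make motion jump back and forth. The sketch below decides the flip once per clip and adds per-pixel Gaussian noise. Representing frames as nested lists of grayscale values, and the default `flip_prob` and `noise_std`, are simplifying assumptions; real pipelines would operate on arrays or tensors.

```python
import random


def augment_clip(frames, flip_prob=0.5, noise_std=5.0, rng=None):
    """Augment a clip given as a list of frames, each a list of rows of grayscale values.

    The flip decision is made once per clip so every frame is flipped together,
    keeping motion consistent over time. Noise is drawn per pixel and clipped to 0..255.
    """
    rng = rng or random.Random()
    flip = rng.random() < flip_prob
    out = []
    for frame in frames:
        rows = [row[::-1] for row in frame] if flip else [list(row) for row in frame]
        noisy = [[min(255.0, max(0.0, px + rng.gauss(0.0, noise_std))) for px in row]
                 for row in rows]
        out.append(noisy)
    return out


clip = [[[1, 2, 3]], [[4, 5, 6]]]  # two 1x3 frames
print(augment_clip(clip, flip_prob=1.0, noise_std=0.0))  # [[[3.0, 2.0, 1.0]], [[6.0, 5.0, 4.0]]]
```

Horizontal flipping is not label-safe for every dataset: in Something-Something, for example, a class such as "Pushing something from left to right" changes meaning when the clip is mirrored.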

Model development and deployment: GTS can help develop and deploy machine learning models on the video dataset, using various deep learning frameworks such as TensorFlow, PyTorch, and Keras.

Model evaluation and optimization: GTS can help evaluate and optimize the performance of the machine learning models on the video dataset, using metrics such as accuracy, precision, recall, and F1-score.
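For single-label classification tasks such as action recognition, these metrics can be computed from true and predicted labels with a few counters. The helper below is a minimal stdlib sketch; libraries such as scikit-learn provide equivalent, more complete implementations. The example labels are made up.

```python
from collections import Counter


def per_class_metrics(y_true, y_pred):
    """Return accuracy and per-class (precision, recall, F1) for single-label predictions."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[t] += 1  # missed the true class t
    accuracy = sum(tp.values()) / len(y_true) if y_true else 0.0
    report = {}
    for c in sorted(set(y_true) | set(y_pred)):
        prec = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rec = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        report[c] = (prec, rec, f1)
    return accuracy, report


acc, report = per_class_metrics(["run", "run", "jump", "jump"],
                                ["run", "jump", "jump", "jump"])
print(acc)  # 0.75
```

Per-class numbers matter for video datasets with uneven class sizes, where overall accuracy can look good while rare actions are almost never recognized.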
