Video Classification using Machine Learning

INTRODUCTION

Human Behavior Analysis (HBA) is an important area of research in artificial intelligence. It has a variety of applications, including video monitoring, ambient-assisted living and smart shopping environments. The supply of human video data is growing rapidly, driven by the leading companies in this sector. From a deep learning (DL) perspective, video classification is closely related to HBA. Thanks to increases in computing power, deep learning techniques have become an important tool for classification over the past few years. Common strategies include Convolutional Neural Networks (CNNs) for image understanding and Recurrent Neural Networks (RNNs) for sequential data such as video and text. The following sections discuss these strategies in more detail.

Deep Learning

Deep learning is a branch of machine learning that uses multi-level ("deep") neural networks to build systems capable of learning features from vast quantities of training data, including data that is not labeled. Because deep learning has outperformed conventional machine learning methods such as Bayesian networks, interest in its applications has grown over the past decade. In recent years, the growth in computational capacity has also made it possible to revisit biologically inspired algorithms that were developed long ago.

Convolutional Neural Network

Convolutional Neural Networks (CNNs) are a kind of Artificial Neural Network specifically designed to process large inputs such as images, audio, or video. Because these inputs are so large, processing them with a typical Fully Connected (FC) network would be extremely inefficient. Broadly, a CNN reduces the amount of data by analyzing each local region of the input for particular features.

CNNs are built from filters (kernels) that play the same role as the weights of an FC network. The difference is that a single convolutional filter is shared across all regions of the input to produce one output feature map. Each output value depends only on a small region of the input, its Local Receptive Field, and because the weights are shared, the CNN has far fewer weights to learn. The output is computed by sliding the filter across the input: at every position, each kernel element is multiplied by the input element it overlaps, and the products are summed to produce the output value at that location.

Reducing the size of the feature maps is a typical way to cut the number of variables and the processing time required by a CNN. The pooling layer (normally placed after a convolutional layer) operates on each feature map independently and keeps the maximum or average value of each small region, shrinking the map's spatial size.

A highly effective way of finding features is to combine several convolutional and pooling layers working in parallel. Because different kernel sizes can be executed in parallel, both basic and more complex features can be detected at various levels within the network. AlexNet (2012) was an early network to use parallelism. Google's Inception network is a highly accurate deep neural network for image recognition. It groups several parallel layers into a block called the "Inception module".
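
The sliding-filter and pooling steps described above can be sketched in NumPy. This is a minimal illustration assuming a single-channel input, stride 1, no padding and non-overlapping 2x2 max pooling; the function names are our own, and real CNN layers add channels, biases and learned kernels.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` with stride 1 and no padding.

    At every position the kernel elements are multiplied by the input
    elements they overlap and the products are summed. (Strictly this is
    cross-correlation, which is what CNN libraries compute as "convolution".)
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # The same kernel (shared weights) is reused at every location;
            # each output only sees a kh x kw patch (its local receptive field).
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2d(fmap: np.ndarray, size: int = 2) -> np.ndarray:
    """Keep the maximum of each non-overlapping size x size window."""
    h = (fmap.shape[0] // size) * size
    w = (fmap.shape[1] // size) * size
    trimmed = fmap[:h, :w]  # drop edge rows/cols that do not fill a window
    windows = trimmed.reshape(h // size, size, w // size, size)
    return windows.max(axis=(1, 3))


image = np.arange(25, dtype=float).reshape(5, 5)
fmap = conv2d_valid(image, np.ones((2, 2)))  # 4 x 4 feature map
pooled = max_pool2d(fmap)                    # 2 x 2 after pooling
# pooled == [[36, 44], [76, 84]]
```

A 5x5 input shrinks to a 4x4 feature map after the 2x2 filter and to 2x2 after pooling, which is the size reduction the text describes.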

Recurrent Neural Network

The techniques described above are designed to classify independent inputs. But how can we deal with time-series data? To model such sequences, a different type of neural architecture was created: Recurrent Neural Networks (RNNs), which contain loops that allow information to persist. The input is a stream of values x_t, and the network produces a stream of outputs; at each iteration, the output of the previous step is fed back as an additional input to the next one. Basic RNNs can only represent short-term temporal dependencies. For long sequences of data, which covers most real cases, a variant of RNN called Long Short-Term Memory (LSTM) networks is used.
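
The feedback loop can be sketched as a vanilla RNN in NumPy. This is a minimal illustration assuming the common form h_t = tanh(W_x x_t + W_h h_(t-1) + b); the weight names, sizes and random values are illustrative, not taken from this work.

```python
import numpy as np


def rnn_forward(xs, W_x, W_h, b, h0=None):
    """Run a vanilla RNN over a sequence and return every hidden state.

    xs:  (T, input_dim), one row per time step x_t
    W_x: (hidden_dim, input_dim) input weights
    W_h: (hidden_dim, hidden_dim) recurrent (loop) weights
    b:   (hidden_dim,) bias
    """
    h = np.zeros(W_h.shape[0]) if h0 is None else h0
    states = []
    for x_t in xs:
        # The previous output h is fed back as an extra input at every step.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)  # (T, hidden_dim)


rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 3))              # 5 time steps, 3 features each
W_x = rng.normal(scale=0.5, size=(4, 3))
W_h = rng.normal(scale=0.5, size=(4, 4))
b = np.zeros(4)
hs = rnn_forward(xs, W_x, W_h, b)         # hs[-1] summarizes the whole sequence
```

Because the state is squashed by tanh and multiplied by W_h at every step, information from early inputs fades quickly, which is why basic RNNs struggle with long-term dependencies.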

LSTM Networks

LSTM networks were introduced by Hochreiter & Schmidhuber (1997). They are RNN-based models capable of learning long-term dependencies. Vanilla RNNs have a very simple structure, similar to a single perceptron with a tanh activation layer. LSTM cells have a more complex structure: instead of a single tanh layer, they contain four layers that interact in a specific way.

Video analysis offers more information for recognition than still images because it adds a temporal dimension, from which motion and other cues can be exploited. At the same time, processing is computationally harder: even a short video clip can contain hundreds or thousands of frames, and not all of them are useful. A naive approach is to treat video frames as static images, apply a CNN to classify each frame, and average the predictions at the video level. However, since each frame represents only a small part of the overall story of the video, this method can rely on incomplete information and confuse classes, especially when the distinctions are fine-grained or when parts of the video are unrelated to the action. Therefore, we believe that understanding how the video evolves over time is crucial for classifying it correctly. From a modeling perspective this is not easy, since we need to model videos of variable length with a fixed number of parameters.
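
A single LSTM step, and its use on clips of different lengths, can be sketched in NumPy. This sketch assumes the widely used formulation with input, forget and output gates plus a tanh candidate layer (the four interacting layers); the stacking order of the gates in the weight matrices is our own choice, and all sizes are illustrative. It also shows how the same fixed set of weights summarizes sequences of any length into one fixed-size vector.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4*H, D), U: (4*H, H), b: (4*H,). Rows are stacked as
    [input gate, forget gate, candidate, output gate] (an arbitrary
    ordering for this sketch; libraries may order them differently).
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b      # all four layers in one product
    i = sigmoid(z[0:H])               # input gate: how much new info to write
    f = sigmoid(z[H:2 * H])           # forget gate: how much old memory to keep
    g = np.tanh(z[2 * H:3 * H])       # candidate values (the tanh layer)
    o = sigmoid(z[3 * H:4 * H])       # output gate: how much memory to expose
    c = f * c_prev + i * g            # updated cell state (long-term memory)
    h = o * np.tanh(c)                # new hidden state / output
    return h, c


def encode_sequence(xs, W, U, b):
    """Summarize a sequence of any length T into a fixed-size vector."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, W, U, b)
    return h


rng = np.random.default_rng(0)
D, H = 8, 4
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
short_clip = rng.normal(size=(10, D))   # e.g. 10 frames of D-dim features
long_clip = rng.normal(size=(300, D))   # 300 frames, same weights
h_short = encode_sequence(short_clip, W, U, b)
h_long = encode_sequence(long_clip, W, U, b)  # both have shape (H,)
```

The additive cell-state update (c = f * c_prev + i * g) is what lets information survive over many steps, in contrast to the repeated tanh squashing of a vanilla RNN.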

The primary goal of this project is to design and implement a deep learning system that identifies and classifies human behavior into two categories, safe activity and dangerous activity, by combining CNN and RNN architectures.

Related Work

Over the last decade, researchers have proposed numerous deep-network-based and hand-crafted techniques for action recognition. Earlier works relied on hand-crafted features designed for non-realistic action videos. Since the proposed technique is based on deep neural networks (DNNs), this section reviews related DNN-based work. In the last few years, many variants of deep learning models have been proposed for human action recognition in video and have shown remarkable performance in computer vision applications.

GTS applied 3D convolutional kernels to video frames along the time axis to capture both temporal and spatial information. Karpathy applied CNNs to multiple frames within each sequence and captured temporal connections through late, early, and slow fusion. However, the results of this method were only marginally better than those of a single-frame baseline. Simonyan and Zisserman used a two-stream CNN framework that combines both types of feature: one stream takes RGB images as input, and the other takes pre-computed optical flow. Adding the optical-flow stream significantly increased recognition accuracy, which demonstrates the importance of motion features. However, since optical flow only contains short-term motion information, it does not allow the CNN to capture longer-term motion transitions. Tran avoided the need for pre-computed optical flow with the 3D convolution (C3D) framework, which lets deep networks learn temporal patterns end to end. Yet C3D only covers a short interval of the sequence. GTS developed the temporal segment network (TSN) structure, which uses sparse temporal sampling to model long-term temporal patterns. Other work investigates various ways of fusing CNN towers to exploit the spatio-temporal information from the appearance and optical-flow networks. These CNN-based methods, however, mainly extract visual appearance features and lack long-range temporal modeling. They also do not account for the fundamental difference between the temporal and spatial domains.
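
The sparse temporal sampling idea behind TSN can be sketched as follows. This is a minimal illustration in the spirit of TSN, not its reference implementation: the video is split into equal-length segments and one frame index is drawn at random from each, so a handful of frames spans the whole video regardless of its length. The function name and segment count are our own assumptions.

```python
from __future__ import annotations

import random


def tsn_sample_indices(num_frames: int, num_segments: int,
                       rng: random.Random) -> list[int]:
    """Draw one random frame index from each of `num_segments` equal segments."""
    if num_frames < num_segments:
        raise ValueError("need at least one frame per segment")
    indices = []
    for k in range(num_segments):
        start = (k * num_frames) // num_segments
        end = ((k + 1) * num_frames) // num_segments  # exclusive bound
        indices.append(rng.randrange(start, end))
    return indices


rng = random.Random(0)
indices = tsn_sample_indices(num_frames=300, num_segments=8, rng=rng)
# one index from each of [0, 37), [37, 75), ..., [262, 300)
```

Sampling a fixed number of frames per video keeps the cost constant while still covering long-range structure, which is the property the text attributes to TSN.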

Methodology

ACTIVITY RECOGNITION SYSTEM

Having reviewed the state of the art in behavior recognition systems, identified the methodological approach and the tools we will employ, and considered the cost of downloading both datasets, we can now outline our plan. The system consists of two distinct DL models: a CNN that reads video frames and extracts their features, and an RNN that analyzes the sequence of features and predicts the action. The DL model is implemented in Python with the Keras framework, using TensorFlow as the backend. Before training, the data must be preprocessed to ensure that the DL model can be fitted correctly.
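
One possible preprocessing step can be sketched in NumPy. This assumes each clip has already been decoded and resized to the 299x299 Inception v3 input size, and that a fixed number of evenly spaced frames (16 here, an illustrative choice) is kept per clip; the scaling from [0, 255] to [-1, 1] matches what Keras' `inception_v3.preprocess_input` does. The function name is hypothetical, not part of this work.

```python
import numpy as np


def preprocess_clip(frames: np.ndarray, num_frames: int = 16) -> np.ndarray:
    """Turn a decoded clip into a fixed-length, Inception-ready array.

    frames: uint8 array of shape (T, height, width, 3), assumed already
    resized (299 x 299 for Inception v3; the spatial size is not checked).
    Returns float32 array of shape (num_frames, height, width, 3) in [-1, 1].
    """
    T = frames.shape[0]
    if T == 0:
        raise ValueError("empty clip")
    # Evenly spaced indices over the whole clip; short clips repeat frames.
    idx = np.linspace(0, T - 1, num_frames).round().astype(int)
    clip = frames[idx].astype(np.float32)
    # Same scaling as Inception v3's preprocessing: [0, 255] -> [-1, 1].
    return clip / 127.5 - 1.0


# Toy 40-frame clip of 4 x 4 images where frame t is filled with the value t.
frames = np.stack([np.full((4, 4, 3), t, dtype=np.uint8) for t in range(40)])
clip = preprocess_clip(frames, num_frames=16)  # shape (16, 4, 4, 3)
```

Giving every clip the same length simplifies batching; an alternative is to keep variable lengths and pad or mask them in the RNN.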

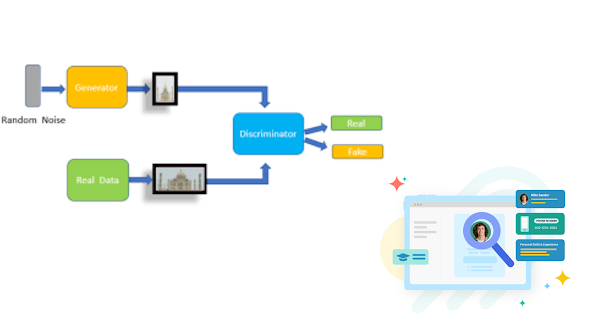

MODEL

The current state of the art in deep-learning activity recognition indicates that an effective way to address this problem is a model that first uses a Convolutional Neural Network to extract features from individual video frames and then uses a Recurrent Neural Network to model the sequence of frames. Other DL behavior recognition models exist, such as a 3D CNN followed by an FC network. In that model, the entire video is fed to the 3D CNN at once, and the CNN extracts not only image features but also motion and temporal features, which are then passed to a network of standard FC layers. The drawback of this approach is that predicting the action requires the entire footage. With the CNN + RNN approach, the action can be predicted before the video has finished, which is more effective because it allows the action to be recognized in real time (early prediction).
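
A minimal Keras sketch of the RNN half of the CNN + RNN idea, assuming per-frame Inception v3 features (2048 values each) have already been computed. The layer size (256 LSTM units) is an illustrative assumption, not the configuration used in this work. Setting `return_sequences=True` makes the model emit a class distribution after every frame, which is one way to obtain early predictions.

```python
import numpy as np
import tensorflow as tf

NUM_FEATURES = 2048  # length of one pooled Inception v3 feature vector
NUM_CLASSES = 2      # safe activity vs. dangerous activity


def build_sequence_classifier(hidden_units: int = 256) -> tf.keras.Model:
    """LSTM over per-frame CNN features that outputs a prediction at every frame."""
    # Time dimension is None, so clips of any length are accepted.
    inputs = tf.keras.Input(shape=(None, NUM_FEATURES))
    x = tf.keras.layers.LSTM(hidden_units, return_sequences=True)(inputs)
    # Dense acts on the last axis, i.e. independently at each time step.
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)


model = build_sequence_classifier()
features = np.random.rand(1, 30, NUM_FEATURES).astype("float32")  # 30 frames
probs = model.predict(features, verbose=0)  # shape (1, 30, NUM_CLASSES)
early = probs[0, 9]   # class probabilities after the first 10 frames
final = probs[0, -1]  # class probabilities after the whole clip
```

To train this per-step output, every time step needs a target, for example the clip label repeated over all steps; with `return_sequences=False` the model would instead give a single prediction at the end of the clip.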

CONVOLUTIONAL PART

Building a high-performing 2D convolutional neural network for understanding images and producing their features (a vector that summarizes the image's content) is not an easy job. Identifying a reliable architecture is difficult, and training it requires a huge amount of time and data. As a result, the most common approach in deep learning is to use an already trained model to extract the key features and transfer those features into our own model. Many models have been pre-trained to recognize images. ImageNet is an online image database that has organized an annual contest (ILSVRC), starting in 2010, testing object detection and classification algorithms. This competition produced a series of DL models from 2012 onward, including AlexNet (2012), ZF Net (2013), VGG Net (2014), GoogLeNet (2014), and Microsoft ResNet (2015). These deep learning models share two main blocks. The first is an image feature extractor built from convolutions, whose number of layers and structure are specific to each model. The second performs classification: a feedforward neural network that takes the image feature vector as input and classifies the type of object (the output size depends on the number of object classes). This second part is tailored to the ILSVRC challenge format. Here we use the feature extractor of a pre-trained model, which is transfer learning: storing the knowledge gained on one problem and applying it to a different but closely related problem. The model we employ is Inception v3, due to its excellent classification accuracy and low computational cost.
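
The transfer-learning step can be sketched with the Keras Inception v3 application. This is a minimal sketch, not the author's exact code: `include_top=False` drops the ILSVRC classification block, `pooling="avg"` turns the last convolutional output into one 2048-value vector per image, and freezing the base keeps the pre-trained filters fixed. The helper names are hypothetical.

```python
import numpy as np
import tensorflow as tf


def build_feature_extractor(weights="imagenet") -> tf.keras.Model:
    """Inception v3 without its ILSVRC classification block, frozen."""
    base = tf.keras.applications.InceptionV3(
        include_top=False,          # drop the 1000-class classifier
        weights=weights,            # "imagenet" = reuse pre-trained filters
        pooling="avg",              # global average pooling -> 2048-d vector
        input_shape=(299, 299, 3),  # default Inception v3 input size
    )
    base.trainable = False          # transfer learning: keep the features fixed
    return base


def extract_clip_features(extractor: tf.keras.Model, frames) -> np.ndarray:
    """Map raw frames (T, 299, 299, 3) with values in [0, 255] to (T, 2048)."""
    x = np.array(frames, dtype="float32")  # copy: preprocess_input may work in place
    x = tf.keras.applications.inception_v3.preprocess_input(x)  # -> [-1, 1]
    return extractor.predict(x, verbose=0)
```

Calling `build_feature_extractor()` downloads the ImageNet weights on first use; `extract_clip_features(extractor, frames)` then yields one feature vector per frame, the sequence an RNN can consume.
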
Some models, such as Inception-ResNet v2, perform better, but they contain layers that require more computing power, and the improvement in classification is not significant. Inception v3 is one of the pre-trained models available in Keras (Figure 6). Inception works as follows. Instead of stacking convolutions one behind the other, Inception uses what its authors call Inception modules: blocks in which the data flow is not sequential. Within these modules, several convolutions of different sizes are computed separately and their outputs are then concatenated. This allows additional features to be extracted. In addition, the network uses 1x1 convolutions to reduce the number of operations. The second component of the Inception network, classification, is made of fully connected layers and a softmax output layer. This type of classifier is ideal when we need to categorize a single image; however, to categorize a stream of images, such as a video, we need RNNs.
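
The Inception module described above can be sketched with the Keras functional API. This is a simplified, GoogLeNet-style module rather than the exact Inception v3 block (v3 refines the design, for example by factorizing larger convolutions); the filter counts used in the example are those of GoogLeNet's first 28x28 module, and the parameter names are our own.

```python
import tensorflow as tf

layers = tf.keras.layers


def inception_module(x, f1, f3_reduce, f3, f5_reduce, f5, pool_proj):
    """GoogLeNet-style Inception module: four parallel branches, concatenated."""
    # Branch 1: plain 1x1 convolution.
    b1 = layers.Conv2D(f1, 1, padding="same", activation="relu")(x)
    # Branch 2: a 1x1 "reduce" convolution cuts channels before the costlier 3x3.
    b3 = layers.Conv2D(f3_reduce, 1, padding="same", activation="relu")(x)
    b3 = layers.Conv2D(f3, 3, padding="same", activation="relu")(b3)
    # Branch 3: same idea with a 5x5 kernel.
    b5 = layers.Conv2D(f5_reduce, 1, padding="same", activation="relu")(x)
    b5 = layers.Conv2D(f5, 5, padding="same", activation="relu")(b5)
    # Branch 4: 3x3 max pooling followed by a 1x1 projection.
    bp = layers.MaxPooling2D(3, strides=1, padding="same")(x)
    bp = layers.Conv2D(pool_proj, 1, padding="same", activation="relu")(bp)
    # "same" padding keeps height and width equal, so outputs stack along channels.
    return layers.Concatenate(axis=-1)([b1, b3, b5, bp])


inputs = tf.keras.Input(shape=(28, 28, 192))
outputs = inception_module(inputs, 64, 96, 128, 16, 32, 32)
module = tf.keras.Model(inputs, outputs)  # output: 28 x 28 x (64+128+32+32) = 256
```

Without the 1x1 reductions, the 5x5 branch would convolve all 192 input channels directly; reducing them to 16 first is what keeps the operation count low.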

CONCLUSIONS

Video classification is difficult for several reasons, such as the shortage of video datasets and low accuracy. The main highlights of this paper are: preprocessing of distinct datasets to fit a deep learning model; transfer learning from a pre-trained deep learning model (Inception V3) to our system; and the use of LSTM recurrent neural networks. Since manually checking recordings around the clock is laborious, this framework could replace that practice and analyze the footage automatically. Its future applications include video-based online search, security surveillance, and detecting and removing re-uploaded copyrighted videos. As future work, we aim to increase the system's accuracy by taking advantage of the diversity that datasets such as ActivityNet offer, to obtain an even more robust activity recognition system. A better model could also be obtained by improving the fine-tuning process, for example by varying the number of layers, the number of neurons, and the learning rate.
